Health Monitoring Module

The Health Monitoring control module of the Sun Control Station software enables you to monitor the status of various parameters of your managed hosts. This document explains the features and services available through the Health Monitoring control module. These include:

Monitoring in the Health Monitoring module is based on polling and events. This means that health status data is acquired either by the Control Station initiating a poll to read the client-state information from each host, or by the managed host informing the Control Station immediately upon encountering a problem (an event).

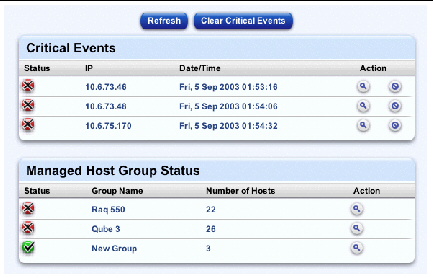

FIGURE 1 shows the Critical Events and the Managed Host Group Status tables.

The time stamp given for an event in the Health Monitor tables reflects the time of its last change in status, for instance from yellow to red.

If a critical event is present on the Control Station, a Status Alert is indicated in the top left corner of the user interface (UI). A critical event results when a transition to a "warning" or "critical" state is detected or generated (meaning that a yellow or red state is returned during health polling).

A critical event can involve any of the services or hardware components on a managed host.

This section alerts you to some difficulties you might encounter using the Health Monitoring module. These difficulties tend to arise when a particular host is managed by more than one Control Station.

The Health Monitor settings (for example, the CPU alarm thresholds) can be changed from any Control Station. When the settings are changed on a Control Station, the new values are propagated to all of that Control Station's managed hosts.

The values from the most recent settings changes overwrite any earlier values on the managed host. However, the settings that appear in the interfaces of the other Control Stations do not update to reflect the most recent settings changes.

As a best practice, if more than one Control Station manages a given host, ensure that the Health Monitor settings on each of these Control Stations are set to the same values.

This problem arises when a single host is being managed by two (or more) Control Stations, and:

Since the Health Monitoring control module is designed to receive LOM information when it is available, the Health Monitor tables on all Control Stations will display this LOM information, even though the LOM control module has not been installed on all Control Stations.

This is not a bug or a malfunction. It just means that you may see LOM information displayed in the Health Monitor tables when you would not expect to see it.

When you click the Health Monitor menu item, the submenu items allow you to view the current status or update the status of the services and hardware components for managed hosts.

The Health Summary submenu item displays a summary of the health status data for the managed hosts.

When you click the Health Summary submenu item, the Critical Events and Managed Host Group Status tables appear; see FIGURE 1.

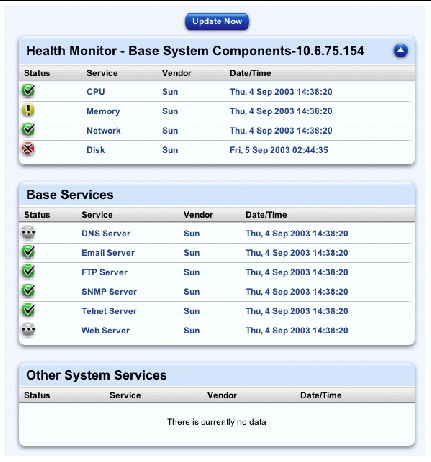

When you click the magnifying glass icon to see more detailed information for a host, three tables appear:

Note - To add a new Health Monitoring service, see Adding New Services to the Health Monitoring Module.

To view a summary of the health monitor data on a managed host:

1. Select Health Monitor → Health Summary.

The Critical Events and Managed Host Group Status tables appear.

2. To view more detailed information for a critical event, click the magnifying glass icon next to the item in the Action column.

The following information tables appear (see FIGURE 2).

Click the up arrow icon in the top right corner to return to the previous screen.

3. If you view the details for a group of managed hosts, the Managed Hosts State table appears, listing the hosts belonging to that group.

You can click the magnifying glass icon next to the host in the Action column. The same three information tables then appear.

Click the up arrow icon in the top right corner to return to the previous screen.

Above the Critical Events table is a Refresh button. This button causes the UI frame to update immediately to reflect the most current data in the database.

This button does not update the database with new information from the managed hosts. To update the information in the database, see Updating Health Status Data.

The services monitored on managed hosts include:

When a critical event occurs on a managed host, the event appears in the Critical Events table. If you decide not to deal with a given critical event, you can clear this event from the table. The problem is still present on the managed host, but there will be no further notification concerning this critical event in the Critical Events table.

Note - If a critical event for a different problem occurs on this same managed host, a new critical event displays in the table.

To clear a particular critical event from the Critical Events table or to clear all critical events:

1. Select Health Monitor → Health Summary.

The Critical Events and Managed Host Group Status tables appear.

2. To clear a particular critical event from the table, click the delete icon next to the event in the Action column.

The Critical Events table refreshes with that critical event removed from the table.

3. To clear all critical events from the table, click Clear Critical Events above the table.

The Critical Events table refreshes with no entries.

You can refresh the health status data for each host. This feature causes the Control Station to immediately retrieve the most recent health status data from a host.

The Update Now button appears in the UI when you are viewing the detailed information tables for an individual host. To refresh the health status data on a managed host:

1. Select Health Monitor → Health Summary.

The Critical Events and Managed Host Group Status tables appear.

2. Click the magnifying glass icon next to the item in the Action column.

The detailed tables of information appear.

3. If you view the details for a critical event, the following information tables appear:

4. If you view the details for a group of managed hosts, the Managed Hosts State table appears, listing the hosts belonging to that group.

You can click the magnifying glass icon next to the host in the Action column. The same three information tables then appear.

5. On the screen showing the detailed information tables for a host, click Update Now above the table.

This forces the Control Station to immediately retrieve the health data from the managed host.

The Task Progress dialog appears.

6. Click the up arrow icon in the top right corner to return to the previous screens.

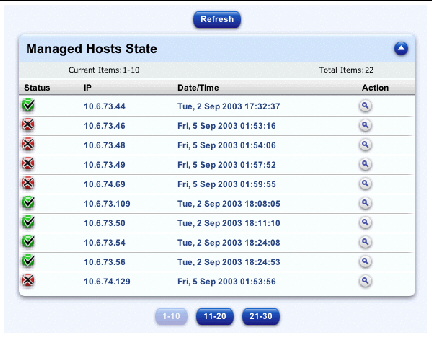

To view the overall health for each of the managed hosts in one table:

1. Select Health Monitor → View Hosts.

The Managed Hosts State table appears, displaying the list of managed hosts (see FIGURE 3).

2. To view more details for an individual host, click the magnifying glass icon next to the host in the Action column.

The following information tables appear:

Click the up arrow icon in the top right corner to return to the previous screen.

3. On the screen showing the detailed information tables for a host, click Update Now.

This forces the Control Station to immediately retrieve the health data from the managed host.

The Task Progress dialog appears.

4. Click the up arrow icon in the top right corner to return to the previous screen.

The Refresh button, located above the Managed Hosts State table, causes the interface to update immediately to reflect the most current data in the database.

This button does not update the database with new information from the managed hosts.

The Alive Polling feature allows the Control Station to verify that the agent is running on a managed host, and that the host can be accessed over the network. It works in the following way:

1. The Control Station sends a simple agent request.

If this request is successful, the agent is functioning normally and the host can be accessed over the network. The status of the network component in the Base System Components table is green.

If this agent request is not successful, the status of the network component changes to red; see FIGURE 2 for an example.

2. The host with the "failed" agent is then pinged through an Internet Control Message Protocol (ICMP) ping to verify network connectivity.

If this ICMP ping is successful, the health-monitoring information table in the database records that the Control Station cannot access the agent on the host IP address.

If this ICMP ping is not successful, the table records that the Control Station cannot access the host IP address over the network.
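The two-step check above can be sketched as a small decision function. This is a minimal illustration, not the Control Station's implementation. The helpers `agent_request` and `icmp_ping` are hypothetical stand-ins driven by the `AGENT_OK` and `PING_OK` variables so that each outcome can be simulated; a real check would call the agent and `ping` instead.

```sh
#!/bin/sh
# Sketch of the Alive Polling decision described above.
# ASSUMPTION: agent_request and icmp_ping are hypothetical stand-ins;
# AGENT_OK=1 / PING_OK=1 simulate a successful agent request / ping.

agent_request() {   # hypothetical: succeeds when the agent answers
    [ "${AGENT_OK:-0}" = 1 ]
}

icmp_ping() {       # hypothetical: a real version would wrap the ping command
    [ "${PING_OK:-0}" = 1 ]
}

# Prints the network component status and, on failure, what is recorded.
alive_poll() {      # args: host
    host=$1
    if agent_request "$host"; then
        echo "green"
    elif icmp_ping "$host"; then
        echo "red: cannot access agent on $host"
    else
        echo "red: cannot access $host over the network"
    fi
}
```

The ping is only attempted after the agent request fails. This mirrors the order in the steps above and lets the two failure cases (agent down versus host unreachable) be told apart.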

The Status Polling Interval specifies how often a polling cycle begins (for example, every four hours) for retrieving the health data from the managed hosts.

When setting this interval, you need to take into account the number of hosts managed by the Control Station. The managed hosts are polled serially. When the Control Station encounters an unreachable host (including Sun Control Station agent failures), the time-out period for polling this host is 10 minutes.

If the Control Station encounters a number of unreachable hosts during a polling cycle, a given cycle may not complete before the start of the following polling cycle.

The minimum Status Polling Interval is one hour. If a Sun Control Station is managing many hosts, you should set a longer interval.
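Because hosts are polled serially and each unreachable host costs a 10-minute time-out, you can estimate a worst-case cycle length before choosing an interval. The sketch below assumes a per-host polling time for reachable hosts (`per_host_min`). That value is not documented here and must be estimated for your own site.

```sh
#!/bin/sh
# Rough worst-case length of one serial Status Polling cycle.
# From the text: hosts are polled one after another, an unreachable host
# costs a 10-minute time-out, and the minimum interval is one hour.
# ASSUMPTION: per_host_min (time to poll a reachable host) is site-specific.

TIMEOUT_MIN=10
MIN_INTERVAL_MIN=60

cycle_minutes() {   # args: reachable_hosts unreachable_hosts per_host_min
    echo $(( $1 * $3 + $2 * TIMEOUT_MIN ))
}

# Prints "ok" if a worst-case cycle fits inside the interval, else "overlap".
check_interval() {  # args: interval_min reachable unreachable per_host_min
    if [ "$1" -lt "$MIN_INTERVAL_MIN" ]; then
        echo "below minimum"
        return
    fi
    needed=$(cycle_minutes "$2" "$3" "$4")
    if [ "$needed" -le "$1" ]; then echo "ok"; else echo "overlap"; fi
}
```

For example, take 40 reachable hosts at an assumed 1 minute each plus 3 unreachable hosts. A cycle can then take 40 + 3 × 10 = 70 minutes. That overruns a one-hour interval but fits within a four-hour interval.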

Alive and Status Polling settings you can configure include:

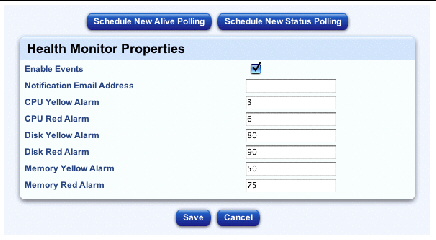

You can configure the following parameters:

Events come into the Control Station on port 80.

This feature does not affect the events that are detected during a polling interval.

You can enter only one email address in this field.

For example, a value of 80 means that a yellow alarm is generated when 80% of the capacity of the hard disk drive is used.

For example, a value of 90 means that a red alarm is generated when 90% of the capacity of the hard disk drive is used.

For example, a value of 50 means that a yellow alarm is generated when 50% of the memory is in use.

For example, a value of 75 means that a red alarm is generated when 75% of the memory is in use.
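The yellow and red thresholds work as a pair, as in the disk (80/90) and memory (50/75) examples above. The following sketch maps an integer usage percentage to an alarm color. It assumes that a threshold counts as reached when usage is greater than or equal to the value; the documentation does not say whether the comparison is inclusive.

```sh
#!/bin/sh
# Maps a usage percentage to an alarm color from a yellow and a red threshold.
# ASSUMPTION: ">=" comparison; integer percentages only.

alarm_color() {  # args: usage_pct yellow_pct red_pct
    if [ "$1" -ge "$3" ]; then
        echo "red"
    elif [ "$1" -ge "$2" ]; then
        echo "yellow"
    else
        echo "green"
    fi
}
```

With the disk example settings, `alarm_color 85 80 90` prints `yellow`. With the memory example settings, `alarm_color 40 50 75` prints `green`.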

To configure the settings for the Health Monitoring control module:

1. Select Health Monitor → Settings.

The Health Monitor Properties table appears (see FIGURE 4).

For a list of settings you can change, see Health Monitor Settings You Can Configure.

The Health Monitor Properties table is refreshed.

To schedule a new Alive Polling task:

1. Select Health Monitor → Settings.

The Health Monitor Properties table appears.

2. Click Schedule New Alive Polling.

This button is located above the table. The Schedule Settings For Alive Polling table appears.

For a list of Alive Polling settings, see Alive and Status Polling Settings.

5. In the Scheduled Tasks table, you can view details for, modify, or delete a scheduled task.

To schedule a new Status Polling task:

1. Select Health Monitor → Settings.

The Health Monitor Properties table appears.

2. Click Schedule New Status Polling.

This button is located above the table. The Schedule Settings For Status Polling table appears.

For a list of settings you can change, see Alive and Status Polling Settings.

5. In the Scheduled Tasks table, view details for, modify, or delete a scheduled task.

The Health Monitoring module allows you to incorporate custom scripts, which it executes and monitors. A script is executed and, based on its results, may send an event that causes an alarm or critical event on the Sun Control Station. The specific information associated with the event is presented in the Other Services table in the detailed information screen. Clearing the Critical Events table resets the alarms.

To make it easy to customize the Health Monitoring module, the module uses a configuration file to specify details on the customized scripts. From this configuration file, the Health Monitoring daemon acquires the name of the monitor, description, program to run, and the text for each of the states that the program will supply.
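Each line of the configuration file is a key followed by its value, as in the sample `monitor_db.conf` file shown in the procedure for creating a new service. The sketch below reads one field from such a file. It illustrates the format only and is not the daemon's own parser; `conf_get` is a hypothetical helper name.

```sh
#!/bin/sh
# Reads one field from a Health Monitor service configuration file.
# Each line is "key value"; the value is the rest of the line.
# ASSUMPTION: illustrative helper only; conf_get is a hypothetical name.

conf_get() {  # args: file key
    # "|| [ -n "$key" ]" also handles a last line with no trailing newline.
    while read -r key value || [ -n "$key" ]; do
        if [ "$key" = "$2" ]; then
            printf '%s\n' "$value"
            return 0
        fi
    done < "$1"
    return 1   # key not found
}
```

For the sample file, `conf_get /usr/mgmt/etc/hmd/monitor_db.conf program` would print `/usr/mgmt/bin/monitor_db.pl`.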

The states are 0, 1, 2 or 3; they correspond to the criticality of the problem and thus to the color and icon of the state presented in the Health Monitoring tables. The states are defined as:

The format of the configuration file is as follows:

Example: /usr/mgmt/bin/cobalt_db.pl

Example: Monitors the database

Example: The database server is not monitored/state unavailable.

Example: The database server is online.

Example: The database server is in limbo.

Example: The database server is offline.

The program specified in the configuration file is required to return (as its exit status) a numeric value of 0, 1, 2 or 3. When the Health Monitoring daemon runs a polling pass (approximately every 10 minutes), it executes the program specified in the configuration file.
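When testing a new monitor program by hand, it can help to check that its exit status is always one of the four allowed values. This is a minimal sketch with a hypothetical helper name (`run_monitor`). The documentation does not say how the daemon treats an out-of-range value, so the sketch simply reports it as invalid.

```sh
#!/bin/sh
# Runs a monitor program and checks that its exit status is 0, 1, 2 or 3.
# ASSUMPTION: run_monitor is a hypothetical test helper, not part of the daemon.

run_monitor() {  # args: program [arguments...]
    "$@" >/dev/null 2>&1   # only the exit status matters
    status=$?
    case $status in
        0|1|2|3) echo "$status" ;;
        *) echo "invalid:$status"; return 1 ;;
    esac
}
```

For example, `run_monitor /usr/mgmt/bin/monitor_db.pl` prints `1` when mysqld is running and `3` when it is not.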

The results (a value of 0, 1, 2 or 3) are captured and stored after the program is executed for the first time. From that point on, each time the Health Monitoring daemon runs, the results are compared to the previous results. If the results are different, an event is generated and sent to the Control Station. The event contains the state, message associated with the state, name, version, and description of the service. If a yellow or red state is returned, a critical event is generated on the Control Station and a Status Alert is generated in the top left corner of the UI.
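The compare-with-previous-result behavior can be sketched as follows. Several details are assumptions: the state file and output strings are hypothetical, the first run only records a baseline (the text does not say that an event is sent then), and states 2 and 3 are taken to be the yellow and red states.

```sh
#!/bin/sh
# Compares a monitor's new state with the previously stored one.
# ASSUMPTIONS: hypothetical state file and messages; 2 = yellow, 3 = red.

check_state() {  # args: state_file new_state
    if [ ! -f "$1" ]; then
        echo "$2" > "$1"          # first run: store the result only
        echo "baseline"
        return
    fi
    old=$(cat "$1")
    echo "$2" > "$1"              # remember the latest result
    if [ "$old" = "$2" ]; then
        echo "no event"
    elif [ "$2" -ge 2 ]; then
        echo "critical event"     # changed to yellow or red
    else
        echo "event"              # changed, but not to yellow or red
    fi
}
```

Storing the latest result on every pass means an event fires once per change, not on every polling pass while a problem persists.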

The configuration file must be placed in the /usr/mgmt/etc/hmd directory and the monitor script in the /usr/mgmt/bin directory.

Include these steps in the install script so that, during installation, the files are placed in the correct directories and the daemon is restarted.

To create a new Health Monitor service:

1. Create the configuration file with the various settings for the new service.

Name the configuration file filename.conf (for example, monitor_db.conf). All of the configuration files are placed in the /usr/mgmt/etc/hmd directory.

This is what a sample configuration file would look like:

```
version 1.0
program /usr/mgmt/bin/monitor_db.pl
vendor Sun
interval 10
name Database
description Monitors the database.
state0msg The database server is not monitored/state unavailable.
state1msg The database server is running.
state2msg The database server is in limbo.
state3msg The database server is not running.
```

2. Create a script to monitor the new service (the program setting in the configuration file).

All of these monitor scripts are placed in the /usr/mgmt/bin directory.

For example, the monitor script for the service Database Check (monitor_db.pl) would look like:

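```perl
#!/usr/bin/perl -w
# This script returns whether the MySQL database (mysqld) is running.
#
# Return values:
#   Disabled/No info: 0
#   Running:          1
#   Not Running:      3

use strict;
use lib '/scs/lib/perl5';
use SysCmd;

# system() returns 0 when the pipeline finds a mysqld process; the ps
# output is discarded so that only the exit status matters.
if (system("/bin/ps -ef | /bin/grep mysqld | /bin/grep -v grep > /dev/null")) {
    exit(3);
} else {
    exit(1);
}
```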

3. In the install script, include the following directive specifically for the new Health Monitor service.

Copy the configuration file and the monitor script to the correct locations.

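```sh
# Copy the monitor script and its configuration file into place,
# logging each step to the install log ($LOG).
echo "Copying script to /usr/mgmt/bin" >> "$LOG"
cp /YourDirectory/patches/monitor_db.pl /usr/mgmt/bin/
echo "Copying config file to /usr/mgmt/etc/hmd" >> "$LOG"
cp /YourDirectory/patches/monitor_db.conf /usr/mgmt/etc/hmd/
```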

4. Create a package file for each type of host on which you want to install this new Health Monitor service.

5. Upload the package to the Control Station using the Software Installer module. Use the Software Installer module either to publish the package or to install it on selected hosts.

For more information, refer to the PDF Sun Control Station 2.2 Software Installer Module.

Copyright © 2004, Sun Microsystems, Inc. All Rights Reserved.