CHAPTER 3

Configuring the Clustered Database Platform

This chapter covers the configuration and setup of the hardware and preinstalled software on the Clustered Database Platform 280/3 system, which includes the following topics:

Before performing the steps in this chapter, you must have already completed the following:

Refer to the clustered platform documentation that came with the system for instructions on how to perform these tasks.

Note - The software installation and configuration instructions in this guide supersede equivalent instructions in the other manuals you might have received. For the latest information on this clustered platform, refer to the Clustered Database Platform 280/3 With Oracle9i Database Real Application Clusters, Late-Breaking News document. See Clustered Database Platform Documentation for instructions on locating this document.

Use FIGURE 3-1 to record site-specific information that is needed to complete the procedures in this guide. Names and addresses shown in the center column represent the examples used in this guide. Assign names and addresses (routed or non-routed) that are appropriate for your site.

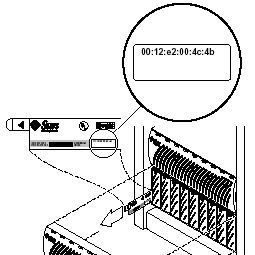

FIGURE 3-1 shows the location of the Ethernet address for the arrays.

When your system is shipped from the factory, the management server is pre-loaded with all of the necessary software to install the Solaris operating environment and Sun Cluster software on the cluster nodes. You must configure the terminal concentrator first.

Caution - You must enter the correct parameters during the initial configuration, or the system will not configure properly.

Because the Cluster Platform does not have a monitor, it is accessible only from another system that you provide (referred to as the local system in this guide). The local system serves only as a remote display for the management server, and it must be connected to the same network as the Cluster Platform. You do not need to permanently dedicate any particular system as the local system; it can be any system that is convenient at the time. For the first several steps, however, the local system must be close enough to the Cluster Platform to attach a temporary serial cable for the tip connection, and it should be running Solaris (or use a laptop as described in Appendix B).

1. Power on the expansion cabinet power sequencers, and then power on all individual system components, except for the Sun StorEdge T3 disk arrays.

Caution - Do not power on the Sun StorEdge T3 disk arrays until you are instructed to do so later in this chapter.

2. Provide console connectivity from the local system to the terminal concentrator:

Note - The initial access to the terminal concentrator is performed using the tip command through a serial port of the local system to Port 1 of the terminal concentrator.

a. Disconnect the serial cable (part no. 530-9524) from Port 1 of the terminal concentrator.

b. Connect the RJ-45 end of the serial cable (part no. 530-2151) to Port 1 of the terminal concentrator and the other end, DB-25 male, to serial port B of your local system.

3. From a terminal window on the local system, type the following command:

# /usr/bin/tip hardwire

The tip(1) command connects the local system I/O to the terminal concentrator I/O during an interactive session.

Note - If the port is busy, see Appendix C for information on configuring another port for a tip connection.

4. Configure the terminal concentrator:

The terminal concentrator undergoes a series of diagnostics tests that take approximately 60 seconds to complete.

Following the diagnostics tests, the tip window of the local system displays:

System Reset - Entering Monitor Mode
monitor::

5. Configure the network addressing information for the terminal concentrator.

Use the addr, addr -d, and sequence commands to modify and verify the network configuration of the terminal concentrator. Refer to your completed worksheet for the network address information.

In the following example, replace the addresses shown in italics with the appropriate addresses for your network environment.

6. Terminate your tip session by entering ~. (tilde and period).

monitor:: ~.

7. Power cycle the terminal concentrator to apply the IP address changes and wait at least two minutes for the terminal concentrator to activate its network.

8. Return the system to the factory cable configuration:

a. Remove the cable that you connected for the earlier tip step.

Disconnect the serial cable (part no. 530-2151) from Port 1 of the terminal concentrator and from the local system.

b. Reconnect the serial cable (part no. 530-9524) to Port 1 of the terminal concentrator.

Note - At this time, the cluster configuration should be cabled as originally shipped from the factory.

9. From the local system, type the following command to verify that the terminal concentrator responds to the new IP address:

# /usr/sbin/ping 192.168.0.2
192.168.0.2 is alive

The local system must be on the same network for which the terminal concentrator was configured.

10. Access the terminal concentrator using the telnet command:

11. Edit the terminal concentrator config.annex file:

annex# edit config.annex

The terminal concentrator opens an editing session for the config.annex file.

12. Type the following information into the config.annex file. Replace 198.168.0.248 with the appropriate default router address for the management server in your network.

% gateway
net default gateway 198.168.0.248 metric 1 hardwired

Ctrl-W: save and exit Ctrl-X: exit Ctrl-F: page down Ctrl-B: page up

13. Press Ctrl+W to save the changes and exit the config.annex file.

14. Enable access to all ports.

15. Reboot the terminal concentrator.

annex#: boot
bootfile: <CR>
warning: <CR>
*** Annex (192.168.0.2) shutdown message from port v1 ***
Annex (192.168.0.2) going down IMMEDIATELY

After 90 seconds, the terminal concentrator and all ports will be accessible from outside the subnet. Use the /usr/sbin/ping 192.168.0.2 command to determine when the terminal concentrator is ready to be used.
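Rather than re-running ping by hand, the wait can be scripted from the local system. The following is a minimal Python sketch and is not part of the platform software: the function names wait_until_alive and solaris_ping, the retry count, and the delay are assumptions made for illustration. It assumes the Solaris form of the command, /usr/sbin/ping host [timeout], which exits with status 0 when the host answers.

```python
import subprocess
import time


def wait_until_alive(host, probe, attempts=30, delay=5.0, sleep=time.sleep):
    """Call probe(host) until it returns True or the attempts run out.

    Returns the number of the attempt that succeeded, or None on failure.
    The retry count and delay are illustrative defaults, not documented values.
    """
    for attempt in range(1, attempts + 1):
        if probe(host):
            return attempt
        if attempt < attempts:
            sleep(delay)  # pause before the next probe
    return None


def solaris_ping(host, timeout=5):
    """Probe a host with the Solaris syntax: /usr/sbin/ping host [timeout].

    Assumption: the command exits with status 0 when the host is alive.
    """
    result = subprocess.run(
        ["/usr/sbin/ping", host, str(timeout)],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0
```

Calling wait_until_alive("192.168.0.2", solaris_ping) returns the attempt on which the terminal concentrator first answered, or None if it never did. The probe is passed in as a parameter so that a different reachability check can be substituted on a local system that is not running Solaris.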

This section contains the procedure for configuring the management server. You must perform the steps exactly as they appear.

When executing commands on the management server from the local system, verify that the DISPLAY shell environment variable (on the management server) is set to the IP address of the local system (local host).

Caution - On the local system, you must set and export the TERM environment variable to a value that emulates the kind of terminal you are using. This setting should also match the terminal emulation you choose in Step 7. If this is not done, the text might not display properly on the screen, nor match the examples in this guide.

If the TERM value is not set properly, the screen text might be garbled and prevent you from interacting with the installation script after you boot the management server. If this occurs, you must stop the installation and start over.

To stop the installation and start over, perform the following steps:

1. In the telnet window of your local system, press Ctrl+], then type send brk to take the management server to the OpenBoot PROM prompt.

2. At the OpenBoot PROM prompt, boot the management server to single-user mode:

ok boot disk -s

3. Execute the sys-unconfig(1M) command to remove previously defined system parameters:

# sys-unconfig

4. Press Y to confirm the sys-unconfig(1M) questions.

5. Restart the installation of the management server by following the procedure below.

In the following steps, replace the italicized examples with the appropriate names and addresses from the worksheet.

1. From your local system, access the terminal concentrator:

Telnet to the terminal concentrator, and select Port 1. The following steps will assist you in the configuration of the management server; at the conclusion of the steps, the management server will reboot, and you will be asked a series of questions to configure the cluster.

The following are the port designations:

# telnet 192.168.0.2
Trying 192.168.0.2...
Connected to 192.168.0.2.
Escape character is '^]'
<CR>
Rotaries Defined:
cli
Enter Annex port name or number: 1

2. Press Ctrl+] and type send brk at the telnet prompt to make sure that the management server is at the "ok" OpenBoot PROM prompt.

3. Set auto-boot? to true from the OpenBoot PROM prompt as follows:

ok setenv auto-boot? true

4. Boot the management server from the OpenBoot PROM prompt to start the configuration process.

The management server boots and begins asking you to define information that is specific to your site.

Caution - The following steps provide critical information for configuring the cluster platform. Use the information you collected in the worksheet.

Selection screens have two options, which you choose with escape sequences or with the following function keys:

F2_Continue F6_Help

Confirmation screens have three options, which you choose with escape sequences or with the following function keys:

F2_Continue F4_Change F6_Help

The only exception to these rules is the Time Zone selection screen, which has a Cancel option:

F2_Continue F5_Cancel F6_Help

7. Select the appropriate terminal emulation:

Choose one of the terminal types from the list that best emulates the kind of terminal you are using on the local system.

Caution - Some of the following terminal types cause unpredictable results. The procedures in this book contain code examples based on the DEC VT100 terminal type.

The terminal selection can affect the output displayed during the configuration process, so the output might not match the examples in this document. After you select the terminal emulation, network connectivity is acknowledged.

8. Select Yes to the network connectivity question.

The eri0 interface on the management server is intended for connectivity to the administration network.

9. Deselect Dynamic Host Configuration Protocol (DHCP) services.

Because the management server must have a fixed IP address and a name recognized by outside clients, DHCP is not supported for this function.

10. Select the primary network interface.

The management server configuration uses eri0 as the default primary network interface. This is the only interface you should configure at this time.

11. Define the host name of the management server.

12. Type the IP address for the eri0 port of the management server.

13. Select a subnet membership.

The default configuration is to provide network connectivity on a subnetted network.

Currently, only version 4 of the IP software is supported. Verify that IPv6 support is disabled.

16. Confirm the site-specific information for the management server:

17. Deselect Kerberos security.

Only standard UNIX security is currently supported.

18. Confirm your selection for Kerberos security.

Verify that Kerberos security is not configured.

19. Select None for the name service menu.

For this function, you must select None. After the management server and cluster nodes are configured, you can manually set up a name service.

20. Confirm the information for the name service.

24. Confirm the date and time, and time zone information.

25. Create a secure root password for the management server.

The system reboots, and the cluster environment customization starts. After the system customization is completed, the management server installs the Solstice DiskSuite software and configures itself as an installation server for the cluster nodes.
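Before typing the worksheet values into the prompts above, it can help to sanity-check them, because a mistyped address forces you to restart the configuration. The following Python sketch is one possible check and is not part of the installation scripts: the function names, the dictionary keys, and the 255.255.255.0 netmask used in the usage note are assumptions for illustration. It relies on the general rule that a default router must lie on the same subnet as the interface that uses it.

```python
import ipaddress


def router_is_reachable(host_ip, netmask, router_ip):
    """Return True if router_ip lies in the subnet defined by host_ip/netmask."""
    # strict=False lets us pass a host address rather than a network address.
    subnet = ipaddress.ip_network(f"{host_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(router_ip) in subnet


def check_worksheet(entries):
    """Return a list of problems found in a dict of worksheet values.

    The keys host_ip, netmask, and router_ip are hypothetical names
    chosen for this sketch; an empty list means no problem was found.
    """
    problems = [
        f"missing value: {key}"
        for key in ("host_ip", "netmask", "router_ip")
        if key not in entries
    ]
    if problems:
        return problems
    try:
        reachable = router_is_reachable(
            entries["host_ip"], entries["netmask"], entries["router_ip"]
        )
    except ValueError as err:  # malformed address or netmask
        return [f"invalid address or netmask: {err}"]
    if not reachable:
        problems.append("default router is not on the management server's subnet")
    return problems
```

For example, check_worksheet({"host_ip": "192.168.0.1", "netmask": "255.255.255.0", "router_ip": "192.168.0.248"}) returns an empty list. Substitute the values from your own worksheet.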

1. Confirm (or deny) that the management server information you entered in the previous procedure is correct.

Before the cluster environment customization script starts, you are given the chance to decide if you want to continue, or if you want to re-enter the information from the previous procedure.

Based on your response to this question, take the following action:

2. Specify the default router address for the cluster:

Enter the Management Server's Default Router (Gateway) IP Address... 198.168.0.248

3. Specify the terminal concentrator name and enter the IP address:

Enter the Terminal Concentrator Name...TC
Enter the Terminal Concentrator's IP Address...192.168.0.2

4. Enter the following cluster environment names and addresses.

5. Enter the internal administration network addresses.

6. Enter the T3 array names and internal network addresses.

You can accept the default values if they do not conflict with existing addresses in your network environment.

7. Specify the network address of the private interconnect:

Note - For the range of recommended private addresses, refer to Section 3 of the Request for Comments (RFC) 1918 Internet standard from the Internet Engineering Task Force (IETF).

8. Specify the netmask address of the private interconnect:

Is it okay to accept the default netmask [255.255.0.0] (y/n) y

Caution - You must press Y to accept the default netmask. Do not press Return. If you press Return, unpredictable behavior may occur.

9. Confirm the assigned names and addresses:

10. When prompted, choose one of the following volume manager products:
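Looking back at the private interconnect address and netmask entered earlier in this procedure, the RFC 1918 recommendation can be checked mechanically. The sketch below is an illustration only, written in Python 3.7 or later (for the subnet_of method); the function name is_rfc1918 is hypothetical. The three address blocks are the ones listed in Section 3 of RFC 1918, and 255.255.0.0 is the default netmask shown in the prompt above. The 172.16.0.0 network address in the usage note is an assumed example, not a value taken from this guide.

```python
import ipaddress

# The three private address blocks defined in Section 3 of RFC 1918.
RFC1918_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(network_address, netmask):
    """Return True if the whole network lies inside one RFC 1918 block.

    Raises ValueError if network_address has host bits set for the
    given netmask, because it is then not a valid network address.
    """
    network = ipaddress.ip_network(f"{network_address}/{netmask}", strict=True)
    return any(network.subnet_of(block) for block in RFC1918_BLOCKS)
```

With these definitions, is_rfc1918("172.16.0.0", "255.255.0.0") returns True, while is_rfc1918("172.32.0.0", "255.255.0.0") returns False because that network falls just outside the 172.16.0.0/12 block.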

Caution - During a recovery, do not power cycle the Sun StorEdge T3 arrays as mentioned in Step 1. Instead, proceed to Step 2.

1. Power on the Sun StorEdge T3 disk arrays.

2. Press Return when the Sun StorEdge T3 disk arrays are finished booting.

Refer to the Sun Management Center 3.0 Installation Guide for additional details.

1. Choose whether to set up the Sun Management Center (Sun MC) software:

Do you want to setup Sun MC 3.0 (y/n) y

2. Answer the Sun Management Center installation questions as shown in the following example:

3. Specify whether you want to install the Sun Management Center Sun Fire 15K administration module.

4. Specify whether you want to install the Sun Management Center Sun Fire 15K system controllers administration module.

5. Specify whether you want to install the Sun Fire 6800/4810/4800/3800 administration module.

6. Press Y to install the Sun Management Center Netra t administration module for monitoring the management server and Sun StorEdge T3 arrays.

7. Press Y to monitor the T3 arrays.

Do you want to setup T3 module [y|n|q] y

8. Press 3 to select the Add managed T3 routine.

Selecting 3 causes the script to prompt you with T3 setup questions.

9. Select 1 to display the list of available T3 arrays.

[1] add T3 from available T3 list.
[2] add new T3
[3] return to the main menu
Please press [1-3] 1

10. Press the line number of the first T3 array.

Note - Do not select any line numbers associated with the nodes.

Available T3:
     Name     IP Address
1    node1    10.0.0.2
2    node2    10.0.0.3
3    T3-01    10.0.0.4
4    T3-02    10.0.0.5
Add available T3 [1-4] 3

11. Type the root password for the selected T3 array, and press Return.

Input root password of T3-01:abc
Check SunMC token files...
Check SunMC token files success.
Check logging status...
Check logging status success.
Press Enter to return: <CR>

12. Press 3 to configure Sun Management support for the second T3 array:

13. Press 1 to display the list of available arrays:

[1] add T3 from available T3 list.
[2] add new T3
[3] return to the main menu
Please press [1-3] 1

14. Press the line number of the second T3 array:

Note - Do not select any line numbers associated with the nodes.

Available T3:
     Name     IP Address
1    node1    10.0.0.2
2    node2    10.0.0.3
3    T3-02    10.0.0.5
Add available T3 [1-3] 3

15. Type the root password for this T3 array, and press Return.

Input root password of T3-02:abc
Check SunMC token files...
Check SunMC token files success.
Check logging status...
Check logging status success.
Press ENTER to return: <CR>

16. Press 6 to exit the T3 module setup program.

The Sun Management Center software will install the module that supports monitoring of T3 arrays.

17. Specify whether you want to install the Sun Management Center CP2000 administration module.

18. If you receive the following question, specify whether you want to install the Sun Management Center CP2000/CP1500 server module.

19. Press N to skip starting the Sun MC components.

1. Press N to install the Oracle9i RAC software.

Do you want to install Oracle9i HA (y/n) n
Oracle9i RAC and Volume Manager 3.1.1 will be installed.

The Oracle software license will be printed.

2. When prompted, press Return to review the Oracle license terms.

3. Press Y to agree to the Oracle license terms.

Note - When executing the ccp(1M) command remotely, you must ensure that the DISPLAY shell environment variable is set to the IP address of the local host.

1. On the local system, type the command /usr/openwin/bin/xhost 192.168.0.1 (the administration IP address of the management server) to allow the management server to display windows on your local system.

2. Log in to the management server as the superuser (root).

3. Set and export the DISPLAY shell environment variable to the IP address of the local host:

# DISPLAY=local_host_IP_address:0.0; export DISPLAY

4. Launch the Cluster Control Panel:

# ccp $CLUSTER &

This command displays the Cluster Control Panel (FIGURE 3-3).

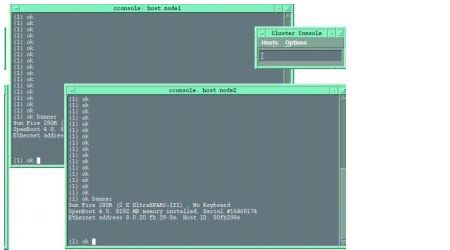

5. In the Cluster Control Panel window, double-click on the cconsole icon to display a Cluster Console window for each cluster node (FIGURE 3-4).

To type text into both node windows simultaneously, click the cursor in the Cluster Console window and type the text. The text is not displayed in the Cluster Console window itself, but it is sent to both node windows so that both nodes execute the commands simultaneously.

6. In the Cluster Console window, type the following command into both nodes simultaneously:

boot net - install

Note - You must use spaces before and after the hyphen (-) character in the boot net - install command.

The following software is automatically installed on both cluster nodes:

All patches are applied and system files are configured to produce a basic cluster environment. In addition, the Oracle 9i Database RAC software is configured and a sample database is set up.

You may see the following error:

Boot device: /pci@8,700000/network@5,1: File and args:
Timeout waiting for ARP/RARP packet
Timeout waiting for ARP/RARP packet
Timeout waiting for ARP/RARP packet
. . .

If so, it is likely that you need to correct one of the following:

Note - Press Ctrl+] and send brk to stop the Timeout waiting for ARP/RARP packet errors.

After you execute the boot(1M) command, you should see output as the management server installs and configures the Oracle software. An example of the output appears elsewhere in this guide.

7. Take one of the following actions based on the volume manager product you chose:

8. Log into each cluster node as the superuser (password is abc), and change the default password to a secure password:

# passwd
passwd: Changing password for root
New password: secure-password-choice
Re-enter new password: secure-password-choice

9. Install the Sun Management Center agent.

For instructions, refer to the Sun Management Center documentation.

10. Configure the Sun StorEdge T3 array shared disk storage.

Configure the T3 array volume configuration if you do not plan to use the default T3 configuration. Refer to the T3 documentation that shipped with your Clustered Platform.

Configure the storage using Solstice DiskSuite, then create disk sets, metadevices, and file systems that suit your needs. Refer to the Solstice DiskSuite documentation.

11. Proceed to Finishing Up.

Note - You will not see these questions if you chose to install Solstice DiskSuite.

You must have two valid VERITAS license keys (one for each node) to complete this procedure.

1. In the Cluster Console window (cconsole), choose to encapsulate the root disk, and type the pathname of the Jumpstart program:

Do you want Volume Manager to encapsulate root [yes]? yes
Where is the Volume Manager cdrom? /net/ManagementServer-ip-address/jumpstart/Packages/VM3.1.1

2. In each individual node window, enter the license key for that node (obtained from VERITAS) to register your version of VERITAS Volume Manager:

Caution - You must enter valid license keys. If the script detects an error in the license key, the script will not complete successfully.

3. In the Cluster Console window, wait for the installer script to display the login prompt:

4. In each individual node window, log in to each cluster node as a superuser (password is abc) and change the default password to a secure password:

node1 console login: root
Password: abc
# passwd
passwd: Changing password for root
New password: secure-password-choice
Re-enter new password: secure-password-choice

node2 console login: root
Password: abc
# passwd
passwd: Changing password for root
New password: secure-password-choice
Re-enter new password: secure-password-choice

5. Install the Sun Management Center agent on the cluster nodes.

Installing the Sun Management Center agent enables Sun Management Center to monitor the nodes. For installation instructions, refer to the Sun Management Center 3.0 Installation Guide.

6. Configure the Sun StorEdge T3 array shared disk storage.

Configure the storage using VERITAS Volume Manager. Configure the volumes and file systems to suit your needs. Refer to the VERITAS Volume Manager documentation.

7. Proceed to Finishing Up.

You need to perform additional steps depending on your environment. This section contains references to additional steps that may be needed.

1. Install and configure your highly available application(s) for the cluster environment.

2. Establish Network Adapter Failover (NAFO) groups, resource groups, logical hosts, and data services to enable your application(s) under the Sun Cluster 3.0 infrastructure. Refer to the Sun Cluster 3.0 documentation. The path to the Sun Cluster data services is:

/net/sc3sconf1-ms/jumpstart/Packages/SC3.0u1/scdataservices_3_0_ul |

3. Optionally, enable the Oracle GUI configuration assistant to access the nodes.

cluster_node1_name oracle
cluster_node2_name oracle
node_private_interconnect_IP_1 oracle
node_private_interconnect_IP_2 oracle

Note - You can run /usr/sbin/ifconfig -a on a node to determine the private interconnect IP addresses.

4. Place the Oracle database into archive log mode.

5. Determine and set up your backup strategy and methods.

You are now done installing and configuring the Clustered Database Platform software.
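As a follow-up to the note about /usr/sbin/ifconfig -a in the steps above, the private interconnect addresses can be picked out of the command output programmatically. The Python sketch below is illustrative only: the function name is hypothetical, and the sample output, including the interface names and all addresses in it, is made up to resemble the Solaris output format. It assumes that you know the private interconnect network you specified during configuration.

```python
import ipaddress
import re

# Matches an interface header such as "eri0: flags=..." or "eri0:1: flags=...".
IFACE_RE = re.compile(r"^(\S+):\s+flags=")
# Matches the indented IPv4 address line that follows a header.
INET_RE = re.compile(r"^\s+inet\s+(\d+\.\d+\.\d+\.\d+)\s")

# Made-up sample in the style of Solaris "ifconfig -a" output; the
# interface names and addresses are assumptions for illustration.
SAMPLE_OUTPUT = """\
lo0: flags=1000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4> mtu 8232 index 1
        inet 127.0.0.1 netmask ff000000
eri0: flags=1000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4> mtu 1500 index 2
        inet 192.168.0.3 netmask ffffff00 broadcast 192.168.0.255
qfe0: flags=1008843<UP,BROADCAST,RUNNING,MULTICAST,PRIVATE,IPv4> mtu 1500 index 3
        inet 172.16.0.129 netmask ffffff80 broadcast 172.16.0.255
"""


def interfaces_in_network(ifconfig_output, network):
    """Map interface name to IPv4 address for addresses inside network."""
    target = ipaddress.ip_network(network)
    found = {}
    current = None
    for line in ifconfig_output.splitlines():
        header = IFACE_RE.match(line)
        if header:
            current = header.group(1)  # remember which interface we are in
            continue
        inet = INET_RE.match(line)
        if inet and current and ipaddress.ip_address(inet.group(1)) in target:
            found[current] = inet.group(1)
    return found
```

Calling interfaces_in_network(SAMPLE_OUTPUT, "172.16.0.0/16") on the sample returns only the qfe0 entry. On a real node, pass in the captured output of /usr/sbin/ifconfig -a and your own interconnect network.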

This section contains information about what has been installed and configured on the cluster nodes and what you can do with the software.

After the management server finishes installing the software on the nodes, you must update the /etc/hosts file so that it corresponds to the cluster platform network configuration.

1. On the management server, use a text editor to open the /etc/hosts file.

2. Delete the first two occurrences of the -admin text string (shown highlighted in the following example):

3. Append -admin to the two internal administration node names (shown highlighted in the following example):

4. Save your changes and quit the editing session.
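The two edits in the steps above can also be expressed as a small text transformation, which may be useful for previewing the result before you change the real file. The Python sketch below is an assumption-laden illustration, not a supported tool: the function name, the tab-separated layout of the sample file, and the idea of identifying the internal administration entries by their IP addresses are all choices made for this example. Compare the output against your own /etc/hosts file before relying on it.

```python
def update_hosts(text, internal_admin_ips):
    """Apply the two /etc/hosts edits to text and return the new text.

    internal_admin_ips is the set of internal administration network
    addresses of the two nodes (taken from your worksheet).
    """
    # Step 2: delete the first two occurrences of the -admin string.
    text = text.replace("-admin", "", 2)

    # Step 3: append -admin to the host name on the internal admin lines.
    updated = []
    for line in text.splitlines():
        fields = line.split()
        if (
            len(fields) >= 2
            and fields[0] in internal_admin_ips
            and not fields[1].endswith("-admin")
        ):
            fields[1] += "-admin"
            line = "\t".join(fields)
        updated.append(line)
    return "\n".join(updated) + "\n"
```

For instance, given a hypothetical file in which 192.168.0.3 maps to node1-admin and 10.0.0.2 maps to node1, calling update_hosts(text, {"10.0.0.2", "10.0.0.3"}) produces a file in which 192.168.0.3 maps to node1 and 10.0.0.2 maps to node1-admin. The function works on a string, so the real file is untouched until you write the result back yourself.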

For security reasons, when the installation and setup of the cluster platform is complete, remove the root user /.rhosts file on each cluster node to prevent unauthorized access to the nodes. This file is not typically needed after the cluster installation is complete. However, some cluster agents may require the root user to have remote access to the cluster nodes. Refer to the agent documentation for more details.

The following packages are installed on the cluster nodes:

The starter database is configured with the default Oracle passwords. For the sys user, the password is change_on_install. For the system user, the password is manager. You should change these passwords by using the alter user command in SQL*Plus.

You should place each starter database provided with the Clustered Database Platform into archive log mode. You must choose a location to which the redo log files can be copied and specify it in the server parameter file. For Oracle9i RAC, you must set it up on each instance.

You should choose a backup method, such as offline or online, to be performed on a regular basis. You must provide the scripts. If you choose not to place the database into archive log mode, only offline backups can be performed. An offline backup requires shutting down the instance, and a recovery can restore data only as of the last offline backup. For more information on these topics or to read about Oracle Recovery Manager, refer to your Oracle software documentation.

The RAC redo log files are not set up in redo log groups. You can, however, create the redo log groups and add the RAC redo log files so that the members of each group mirror one another for redundancy. Use the ALTER DATABASE ADD LOGFILE MEMBER command to add the RAC redo log files to the groups.

If you add the RAC redo files and the database is placed into archive log mode, the Archiver background process can take advantage of the multiple copies to perform pre-fetching of redo log blocks from each group member in a round-robin fashion. For more information, refer to your Oracle software documentation, as well as Doc. ID 45042.1 on http://metalink.oracle.com. (You must be registered to use this site.)
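To make the ALTER DATABASE ADD LOGFILE MEMBER command mentioned above more concrete, the following Python sketch generates the statements for a set of groups. It is a sketch under stated assumptions: the function name is hypothetical, and the group numbers and file paths in the usage note are placeholders rather than values from the starter database. Review the generated statements and run them in SQL*Plus yourself; the code does not connect to the database.

```python
def add_logfile_member_statements(groups):
    """Build ALTER DATABASE ADD LOGFILE MEMBER statements.

    groups maps a redo log group number to the list of member file
    paths to add to that group. Statements are returned in group order.
    """
    statements = []
    for group in sorted(groups):
        for path in groups[group]:
            if "'" in path:
                # A quote would break the SQL string literal.
                raise ValueError(f"path must not contain a quote: {path!r}")
            statements.append(
                f"ALTER DATABASE ADD LOGFILE MEMBER '{path}' TO GROUP {int(group)};"
            )
    return statements
```

For example, add_logfile_member_statements({1: ["/placeholder/redo1b"]}) returns a one-element list containing ALTER DATABASE ADD LOGFILE MEMBER '/placeholder/redo1b' TO GROUP 1; where the path is a placeholder for one of your own redo log files.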

You can access operation instructions in the Clustered Database Platform 280/3 system documentation and the Oracle documentation (see Clustered Database Platform Documentation).

Copyright © 2002, Sun Microsystems, Inc. All rights reserved.